Date tables in themselves are nothing new. Every data warehouse and business intelligence project includes one of those and typically it is one of the first tables which we find on our list of implementation tasks. Surprisingly, however, I am finding that in many implementations the omnipresent date table cannot support some analytics. In this post I will outline a few considerations which we need to take into account when building date tables in general, and some which apply specifically to SSAS.

UPDATE (9th October 2011): If you are a PowerPivot user (or would not mind using an OData feed) there is a simple way to get a date/calendar table as there is a free one available on the Azure DataMarket. I recently blogged about this new project in my Introducing Project DateStream (CodePlex) post.

UPDATE 2 (16 October 2011): John Simon recently published a nice T-SQL script which can be used to create and populate a date table on his blog. Have a look if you need one!

Grain, Key and Format

In general, date tables are on a daily/date grain. This is because the lowest level of reporting we need to do is very often on full days. Even if we need to analyse on time intervals smaller than a day, we should still build a Date and a Time table to support this. Why? Because if we pack it all in the same table we end up with too many rows and this is something we typically want to avoid in any dimension. As we have a row per day, one of our columns is inevitably based on the calendar date. It is also typically the dimension key. As with all other dimension tables, we need to be able to join the table to our fact tables and this is done on our key. To keep our fact tables as small as possible, we need the smallest possible data type for our keys, and in the case of a Date this is usually an integer. However, unlike with other dimensions, we do not have to have a meaningless integer for our surrogate key because it does not give us any advantage. It is very easy to convert between a datetime and an int and in SQL Server datetime is 8 bytes, while int is 4. In SQL 2008+ we have another type suitable for our date table key – date, which is 3 bytes. However, for compatibility reasons, it is frequently better to stick to an int. Luckily, we also have the very convenient ANSI/ISO 8601 standard to follow in order to make our integer dates easier to work with. If we do encode them with the YYYYMMDD format, (thus the 15th of January 2011 becomes 20110115), we can easily sort on the column and compare two dates with the standard > and < operators. Additionally, we also escape the ambiguities around date formats in the USA and the rest of the world.

Attributes

Building the date key column is a good start but to allow for better analytical capabilities we need to add other attributes. As in most cases we need to analyse our data not only on days, but also on larger intervals (e.g. Months, Quarters and Years), we need to add columns for each of them. A typical requirement is to support both Financial and Calendar date hierarchies. As with dimensions it is ok to have many columns, we can add one for each combination of the type of calendar and period (e.g. FinancialMonth and CalendarMonth, FinancialQuarter and CalendarQuarter, etc.). Other interesting attributes we may want to have materialised in the table are the likes of Weekends, Public Holidays (even though we need to maintain these), Solstice/Equinox and PhaseOfMoon (thanks for Thomas Kejser for mentioning these in an online conversation recently). Basically, for a fully functional date table we need to consider anything which could be of business value without going to extremes.

Of course, when working with SSAS we also want to have an integer key for each attribute and possibly a common name for it. This multiplies the number of columns we need by two – one Id and one Name column for each of the attributes we have decided to implement. Some attention needs to be spared when determining the formats of each attribute Id. If we are to be building a hierarchy out of a collection of attributes we want those to form a nice natural hierarchy. That is – each child member should not have two parents with different Ids. To illustrate the concept, let’s consider two different months – January 2011 and January 2012. While they are the first month of the calendar year, they represent different entities when reporting. We do not aggregate data to January only, but to January in each respective year. Therefore, if we were to write a SQL query to get data for January 2011 and our Ids for both of these members are 1, we would also need to use the Year attribute to get the values we need. Effectively, our hierarchy would have two children with identical Ids of 1 with different parents (with Ids of 2011 and 2012). This is a particular problem when working with SSAS as it definitely prefers unique Ids for each separate attribute member. There is a very easy solution – we can include both the Year and the Month number in the MonthId. In our case, January 2011 gets an Id of 201101 and January 2012 – 201201. Now we don’t have the same issue. Similarly, we must pay attention when we construct the Ids of other attributes which participate in our hierarchies like Quarter (YYYYQQ) and Half Year (YYYYHH).

Weeks

The week is a special case in the calendar. While we can say that Days roll up to Months, which roll up to Quarters, which in turn roll up to Half Years and Years, Weeks are a different story. If we are not using a special calendar like a 4-4-5 calendar, we are dealing with a real-world entity, which does not naturally belong to only one larger period. In most cases we do not have to worry about the week falling between Day and Month as business understands that this is not a very easy to work with hierarchy. However, business users very often are ignorant about the fact that weeks do not roll up nicely to years, too. We can again read ISO 8601, which also deals with the Week->Year problem. Basically, the ISO has come up with a simple solution – if we have a week which has dates in two years, we count it towards the year which contains more dates (or, the year which contains the Thursday of the week). Why is this important? Well, we can simply split a week in two, however, this means that certain weeks in our table contain less than 7 days. If we compare such weeks with a Weekly Growth calculation we will notice a problem – the amounts aggregated to them are smaller than usual. Similarly, on a graph showing weekly amounts, we have a dip as the two parts of the same week are plotted as separate points. If the users do not care, then it is not a problem, but it is something we should consider and ask about when building the week attribute in our date table.

Ranges

The date table has a limited amount of rows and we have to make a decision on how many are enough. Some developers build date tables with ranges all the way from 1900 to 2100, or even further. As we have roughly 365.25 days per year (note how 1900 is not a leap year, not is 2100 – something Excel doesn’t know), for 10 years of data we end up with ~3652 rows. With 100 years we have 36525 rows. It is quite doubtful that a data warehouse will contain data for all these years. In most cases it contains a bit of historical data and is designed to keep data for another 20-50 years. To optimise the performance of the queries using the date table it is a good idea to have a dynamic range of dates. In our ETL we can easily keep expanding the range as needed, or collapse it if we ever purge fact data. One thing which is often overlooked when picking the date range is the fact that many times queries depend on the completeness of the date range. A major mistake, which we must avoid are basing the date table on fact data and allowing gaps in the range, and having incomplete top-level periods.

The first problem is easy to explain. Zealously trying to keep the date dimension as small as possible by reducing the number of days (i.e. rows) to only the applicable ones for our fact data can introduce gaps in the overall table. In example, if we have no data for 26th of January because our business was closed for Australia Day, and we “optimise” the date table to exclude this day from the date table, all analytics which depend on counting the number of dates in January will be skewed. An average calculation doing Sales/NumberOfDaysPerMonth will divide by 30, not 31. One of the reasons for having a date table at the first place is to avoid such problems. Hence, gaps in the date table must be avoided.

Secondly, we must also ensure that all top-level periods (i.e. Year usually is the top level in the Calendar Hierarchy) must be also complete. It is not acceptable to cut off the date range with a month, so we have a constant number of dates. Even if our fact data is implemented over a fixed window of 24 months (rolling), we should not do the same with the date table. In example, if we are in September 2011 and we have fact data for 24 months prior to this (e.g. September 2009 – September 2011), the date table should also include data for months prior to that (e.g. January 2009 – September 2011). The reason is the same as before – all calculations doing Amount/NumberOfPeriodsWithinAPeriod would be wrong for 2009. Furthermore, if we use something like window functions in SQL partitioning by year and counting the months within the period to compare equivalent ones will be incorrect. The first month of 2009 would be September and the first in 2010 – January. Because of such issues, it is best to keep all top level periods complete. A solution could be removing data from the date table once we are not interested in the complete top level period. In the case of the 24 rolling months, we can remove all of 2009 with all its periods/rows once we move to 2012 and we are interested in January 2010 – January 2012.

In SSAS all date functions like ParallelPeriod and ClosingPeriod work with relative periods. To get the ParallelPeriod for January 2010 in 2009, SSAS will determine that January 2010 is the first month in 2010, go back to 2009 and pick the first month there. If the first month is September 2009, we will get that returned.

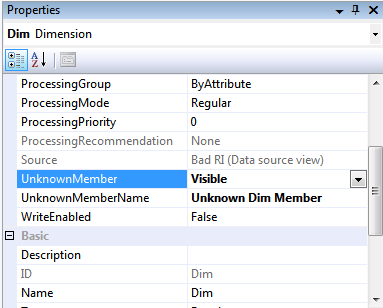

Unknowns

As with other dimensions, we should also have an Unknown member in the date dimension. While with all other dimensions we usually pick a value like -1 for the Unknown member Id and Name of “Unknown”, with a date table things a slightly different as we often have columns which are some sort of a date/time data type and we cannot cast -1 to a date in a meaningful way. Therefore, in many cases we can pick a complete outlier, like 19000101, which we use for storing Unknowns. Depending on the scenario, we may not need to do that, but if we do, there is nothing wrong with doing that as long as we make sure that it is clear that it is a special case. If the data starts in 2001 and we pick 20000101 as an unknown value many users will be wondering why there is some data against that date.

Conclusion

The best date table is the one we don’t notice and take for granted. Given that it is not something that changes at all, the structure of the date table is the one common over many different implementations and whether in SQL Server, SSAS or PowerPivot, the fundamentals stay the same. It can be shared and is part of the base of virtually every BI solution. It, unfortunately, is built wrong very often, thus severely impairing the capability of the system it is a part of. With the arrival of self-service BI I could imagine an increasing need for practical advice on this specific data modelling technique and I hope this article helps with delivering a bit of it.

You must be logged in to post a comment.